Final Report

This is the report for final competition of EE346 Mobile RobotNavigation and Control.

Table of contents

Introduction

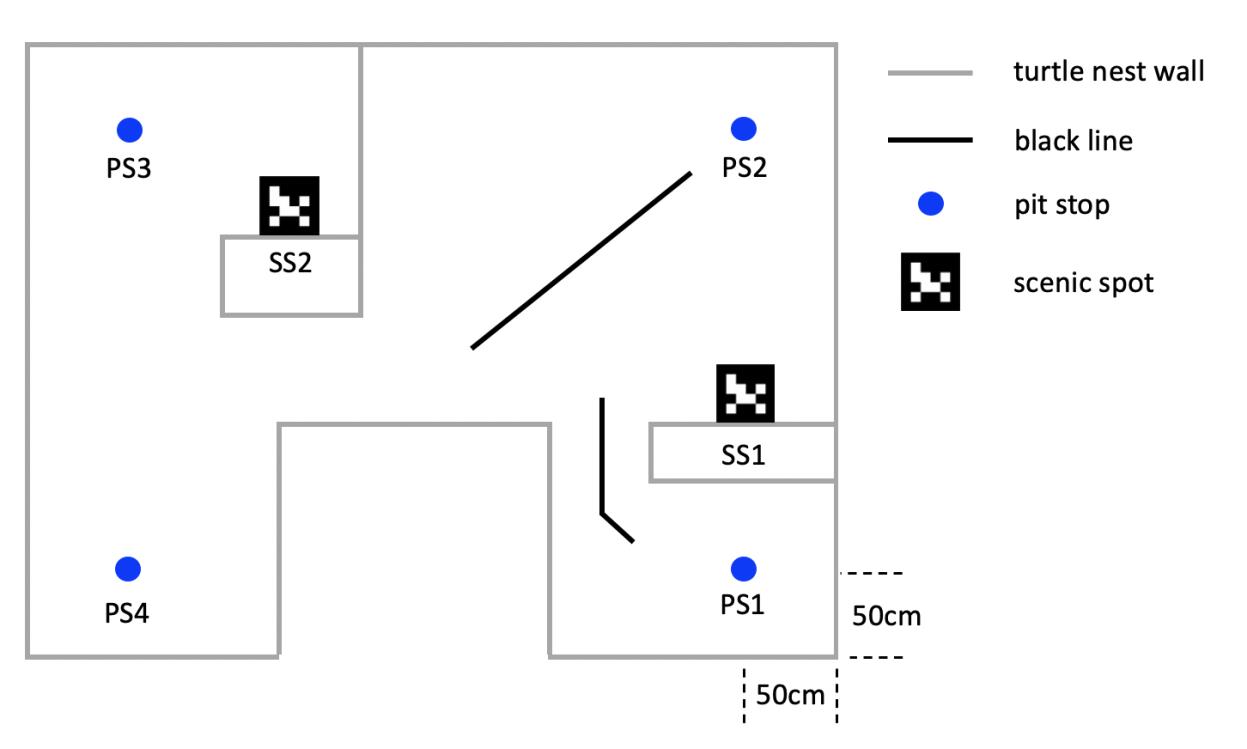

The final competition project integrates the knowledge of navigation and visual recognition learned in this semester into a system. The whole competition project consists of two parts. The whole competition map is shown in Figure 1.

The first part of the competition is under the premise of unlimited time. Turtlebot3 starts from PS1 (pit stop), and it has to stop at each PS point for one second in sequence or when the Turtlebot3 arrives, and make sound to indicate that it has reached the corresponding PS point. The important thing to note here is that any part of the Turtlebot3 body must occupy the PS point for it to appear that Turtlebot3 has reached the PS point. In addition, Turtlebot3 also needs to identify ArUco marker for two Scenic spots (SS) in the first part of the competition. Note that Turtlebot3 does not give external help in identifying SS. What’s more, Turtlebot3 is not allowed to touch the wall during the game.

The second part of the competition is under the premise of 3-minute time. Turtlebot3 also starts on PS1 and it can try any task of PS1 or SS to earn points, but turtlebot3 must alternate tasks between the left half and the right half of the turtle nest. Specifically, Turtlebot3 can earn points by completing one or two tasks in half of the environment (for example, PS2 only or PS2+SS1), and then it must switch to the other half to complete additional tasks (for example, PS3 only or PS3+SS2). The requirements for completion of each task are the same as in Part 1.

Experimental Platform

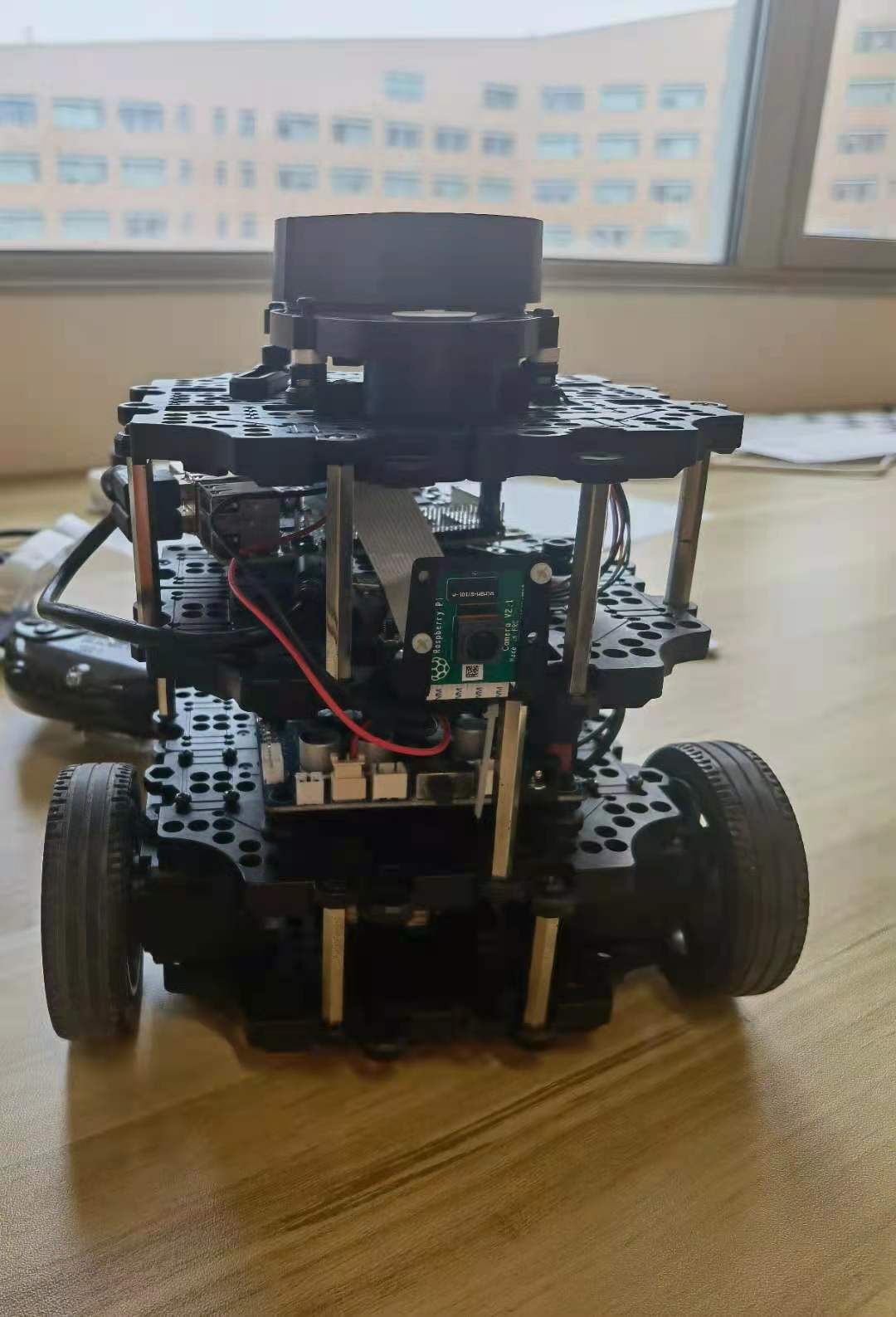

The mobile robot used for the whole competition project is Turtlebot3, which is a small, low-cost, fully programmable, ROS-based mobile robot. For Turtlebot3 related components and a user manual, click on the link.The physical diagram of the Turtlebot3 is shown in Figure 2.

When using visual recognition of AR marker, Turtlebot3 needs to transmit the collected video to the computer end, and then carry out relevant processing on the laptop. The laptop used in this competition is Lenovo Y7000P 2020, with RTX2060 GPU and I7-10750H CPU. The photo of the Lenovo Y7000P is shown in Figure 3.

We mentioned earlier that Turtlebot3 is a mobile robot based on ROS. ROS is the abbreviation of Robot Operating System. ROS is a highly flexible software architecture for writing robot software programs. The main goal of ROS is to provide support for code reuse for robotics research and development. ROS is a framework of distributed processes (i.e., “nodes”) encapsulated in packages and feature packs that can be easily shared and distributed. ROS also supports a federated system similar to a code repository, which also enables collaboration and delivery of projects. This design allows the development and implementation of a project to be completely independent (ROS free) from the file system to the user interface. At the same time, all projects can be put together by ROS’s basic tools.

Build the Map

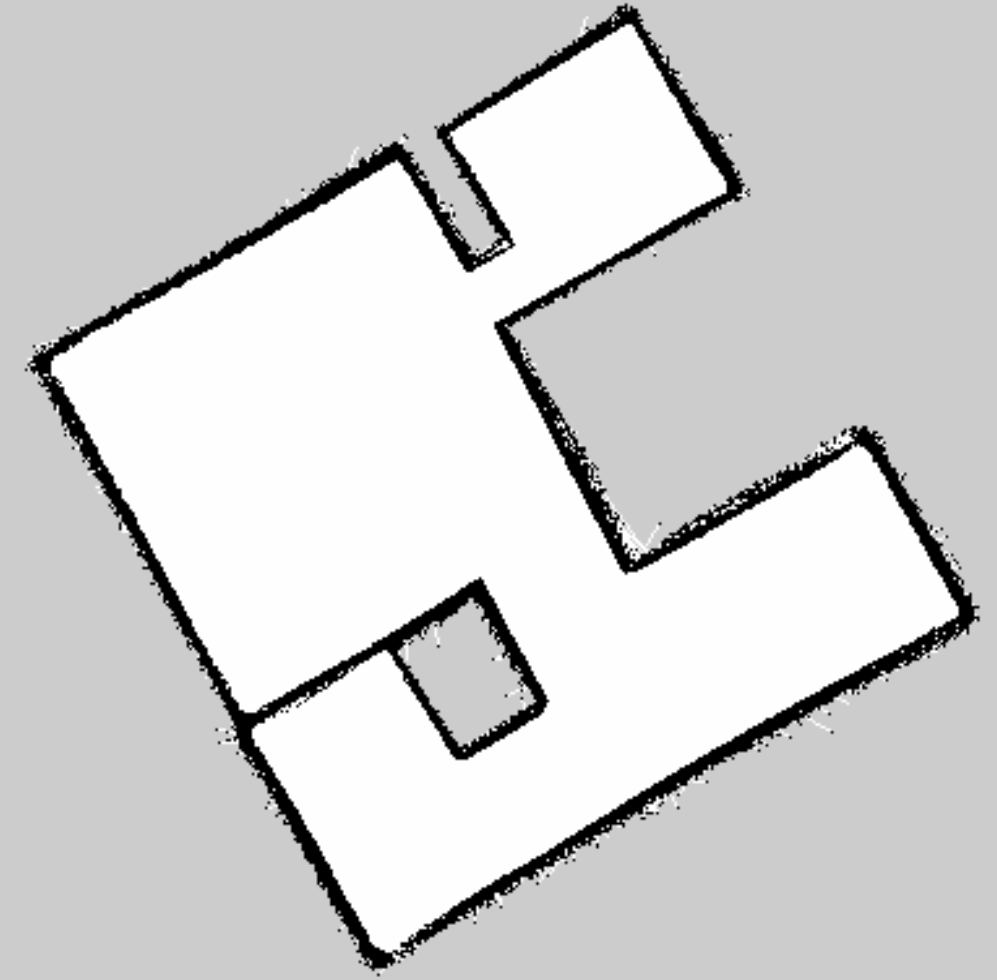

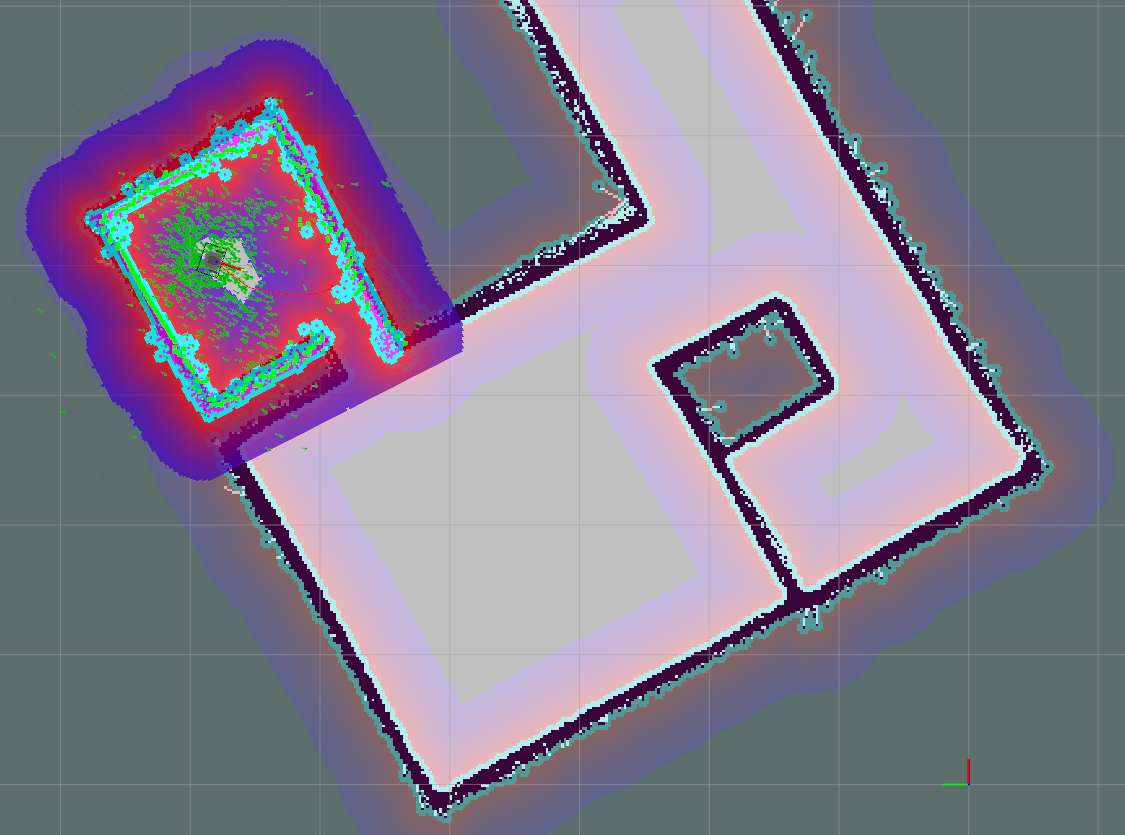

We build the map using GMapping which use sensor data from laser scan and odometry as input. We used the remote control to control the Turtlebot3 to complete a loop along the wall at a slower speed. The distance between the Turtlebot3 and the wall is about 50cm.

We adjust the default gmapping config file in Turtlebot3 SLAM package. Set the resolution to 0.02m.

xmin: -5.0

ymin: -5.0

xmax: 5.0

ymax: 5.0

delta: 0.02

Use roslaunch turtlebot3_slam turtlebot3_slam.launch start the GMapping Node and rosrun map_server map_saver -f ~/map save the Map.

After many tries, we get the map below which looks no bad.

Navigation

We use Adaptive Monte Carlo Localization(AMCL) do the navigation. The navigation node has been provided by Turtlebot3 package. we just launch the this node with the pre-built map.

roslaunch turtlebot3_navigation turtlebot3_navigation.launch map_file:=$HOME/map.yaml

Then we need estimate the initial pose in Rviz.

Our navigation program is written in C++ base on the code in ros navigation wiki tutorials.

#include <ros/ros.h>

#include <move_base_msgs/MoveBaseAction.h>

#include <actionlib/client/simple_action_client.h>

typedef actionlib::SimpleActionClient<move_base_msgs::MoveBaseAction> MoveBaseClient;

int main(int argc, char** argv){

ros::init(argc, argv, "simple_navigation_goals");

//tell the action client that we want to spin a thread by default

MoveBaseClient ac("move_base", true);

//wait for the action server to come up

while(!ac.waitForServer(ros::Duration(5.0))){

ROS_INFO("Waiting for the move_base action server to come up");

}

move_base_msgs::MoveBaseGoal goal;

//we'll send a goal to the robot to move 1 meter forward

goal.target_pose.header.frame_id = "base_link";

goal.target_pose.header.stamp = ros::Time::now();

goal.target_pose.pose.position.x = 1.0;

goal.target_pose.pose.orientation.w = 1.0;

ROS_INFO("Sending goal");

ac.sendGoal(goal);

ac.waitForResult();

if(ac.getState() == actionlib::SimpleClientGoalState::SUCCEEDED)

ROS_INFO("Hooray, the base moved 1 meter forward");

else

ROS_INFO("The base failed to move forward 1 meter for some reason");

return 0;

}

We just change the goal.target_pose.pose to the position of each PS and SS point. According the position of the corner in the map, we can calculate the PS position that 50cm away from both walls.

from math import cos, sin

ps2_radians = 0.559347

x2 = -0.03884 + 0.5 * cos(ps2_radians) + 0.5 * sin(ps2_radians)

y2 = 4.08117 + 0.5 * sin(ps2_radians) - 0.5 * cos(ps2_radians)

ps3_radians = 0.5759972

x3 = 2.5153 + 0.5 * cos(ps3_radians) - 0.5 * sin(ps3_radians)

y3 = -0.448662 + 0.5 * sin(ps3_radians) + 0.5 * cos(ps3_radians)

ps4_radians = 0.5759972

x4 = 6.87578 - 0.5 * cos(ps4_radians) - 0.5 * sin(ps4_radians)

y4 = 2.19974 - 0.5 * sin(ps4_radians) + 0.5 * cos(ps4_radians)

ArMarker position can be read from topic /ar_pose_marker. If the marker is found, the computer will say the ID this marker.

boost::shared_ptr<ar_track_alvar_msgs::AlvarMarkers const> msg;

msg = ros::topic::waitForMessage<ar_track_alvar_msgs::AlvarMarkers>("/ar_pose_marker", ros::Duration(1.0));

if (msg) {

for (auto &marker : msg->markers) {

int id = marker.id;

double dis = marker.pose.pose.position.z;

ROS_INFO("find marker id: %d, distance %f", id, dis);

if (dis < 2) {

std::string words = std::to_string(id);

sc.say(words);

status = PS4;

}

}

}

For SS1, we want the robot to find marker as soon as possible, therefore, we let the robot approach marker gradually and move to PS3 as soon as the marker is found. For SS2, we just let the robot move to a certain position and recognize the marker.

Section 1 flow chart:

Section 2 flow chart:

Result

Section 1

Our Turtlebot knocked into the wall when going to PS4 in first round. It could be because the rotation speed of the two wheels of our robot is not consistent which lead robot deviate from the global planning path. In second round, we added middle position between SS2 and PS4. However, the program after robot reached to PS4 will break and we just copied the code. So our robot just stopped at the middle position and we only got 37 points.

Section 2

In first round our robot only got 38 points. In second round, we adjusted the max speed from 0.22 to 0.18 in dwa_local_planner so the robot moved more smoothly and final we got 60 points.

Conclusion

In this competition, we learned how to use GMapping to build the map and use AMCL to do navigation. This competition also taught us a lesson that never forget to check the code.

Contribution: Lingxiao Meng(Navigation Code, Webpage), Can He(Build the Map, Report).